Thursday, January 31, 2008

Best Practices for Date/Time Calculations in SQL Server

Worm Mitigation Technical Details (Cisco Systems)

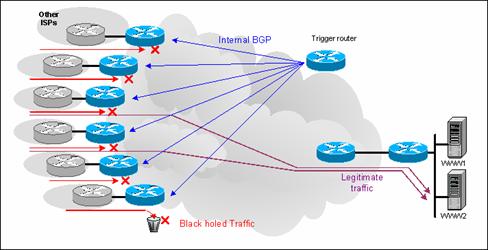

Remote-Triggered Black Hole Routing

router bgp 999

...

redistribute static route-map STATIC-TO-BGP

...

!

route-map STATIC-TO-BGP permit 10

match tag 66

set ip next-hop 192.0.2.1

set local-preference 50

set community no-export 999:000

set origin igp

!

Route-map STATIC-TO-BGP permit 20

!

...

ip route 171.xxx.xxx.1 255.255.255.255 Null0 Tag 66

!

Redistributing customer routes into BGP

For example, if you use static routing with your customers and want to redistribute the static routes into BGP, use the following configuration (I’ve used tag 123 to tag static routes that should get inserted into

BGP).

router bgp 65001

redistribute static route-map StaticToBGP

!

route-map StaticToBGP permit 10

match tag 123

set community no-export additive

When you configure a static route toward the IP subnet 10.1.2.0/24 …

ip route 10.1.2.0 255.255.255.0 Null0 tag 123

… it’s automatically inserted in the BGP table and marked with the no-export community:

R1#show ip bgp 10.1.2.0

BGP routing

table entry for 10.1.2.0/24, version 3

Advertised to

update-groups:

1

Local

0.0.0.0 from 0.0.0.0 (10.0.1.1)

Origin

incomplete, metric 0, localpref 100, weight 32768, valid, sourced,

best

Community: no-export

Wednesday, January 30, 2008

CompressorRater - Rate JavaScript Compression Utilities

JSMin is a conservative compressor, written several years ago by Douglas Crockford. It is considered safe (especially if you verify your code with JSLint first-- an excellent thing to do anyway) because it doesn't attempt to change any variable names.

Dojo shrinksafe is a very popular Java based JavaScript compressor that parses the JavaScript using the rhino library and crunches local variable names.

Packer by Dean Edwards, is also a very popular JavaScript compressor, that can go beyond regular compression and also add advanced on-the-fly decompression with a JavaScript runtime piece.

the YUI Compressor is a newer compressor written by Julien Lecomte, that aims to combine the safety of JSMin with the higher compression levels acheived by Dojo Shrinksafe. Like Dojo shrinksafe, it is written in Java and based on the rhino library

Reading Ad Hoc Text Files with OpenDataSource (SQL Server Central)

select * fromOpenDataSource('Microsoft.Jet.OLEDB.4.0', 'Data Source = C:\; Extended Properties = "Text;HDR=NO;"')...test1#txtFurther Reading:

http://msdn2.microsoft.com/en-us/library/aa276845(SQL.80).aspx - The MSDN general OpenDataSource reference page.

http://msdn2.microsoft.com/en-us/library/ms709353.aspx - The MSDN reference on the schema.ini file.

Tuesday, January 29, 2008

JavaScript Pretty Date

prettyDate("2008-01-28T20:24:17Z") // => "2 hours ago"

prettyDate("2008-01-27T22:24:17Z") // => "Yesterday"

prettyDate("2008-01-26T22:24:17Z") // => "2 days ago"

prettyDate("2008-01-14T22:24:17Z") // => "2 weeks ago"

prettyDate("2007-12-15T22:24:17Z") // => undefined

JavaScript Pretty Date

pretty.js (Also include a .prettyDate() jQuery plugin, for convenience.)

Demo (Some examples of date conversion using basic DOM manipulation.)

BGP Peer Session Templates

IOS releases 12.0S and 12.3T introduced peer templates, a scalable hierarchical way of configuring BGP session parameters and inbound/outbound policies. For example, to configure the session parameters for all your IBGP sessions, use the following session template:

router bgp 65001http://www.cisco.com/univercd/cc/td/doc/product/software/ios123/123newft/123t/123t_4/gtbgpdpg.pdf

template peer-session IBGP

remote-as 65001

description IBGP peers

password s3cr3t

update-source Loopback0

After the session template has been configured, adding a new IBGP peer takes just a single configuration command (two if you want to add neighbor description):

router bgp 65001

neighbor 10.0.1.2 inherit peer-session IBGP

neighbor 10.0.1.2 description R2

In Cisco IOS release 12.3T (integrated in 12.4), we've got an interesting (and quite understated) BGP feature: BGP peer-groups are no longer a performance feature (previously, IOS used them to reduce the time needed to compute outbound BGP updates). IOS now performs automatic grouping of BGP neighbors in dynamic update peer-groups (pdf) that receive identical BGP updates based on per-neighbor outbound parameters.

Monday, January 28, 2008

Friday, January 25, 2008

Securing Networks with Private VLANs and VLAN Access Control Lists (Cisco Wrap)

One of the key factors to building a successful network security design is to identify and enforce a proper trust model. The proper trust model defines who needs to talk to whom and what kind of traffic needs to be exchanged; all other traffic should be denied. Once the proper trust model has been identified, then the security designer should decide how to enforce the model. As more critical resources are globally available and new forms of network attacks evolve, the network security infrastructure tends to become more sophisticated, and more products are available. Firewalls, routers, LAN switches, intrusion detection systems, AAA servers, and VPNs are some of the technologies and products that can help enforce the model. Of course, each one of these products and technologies plays a particular role within the overall security implementation, and it is essential for the designer to understand how these elements can be deployed.

http://www.cisco.com/warp/public/473/90.pdf (pdf)

Large Object Storage in a Database or a Filesystem?

Available Documents: Word 153 Kb, PDF 159 Kb

Application designers often face the question of whether to store large objects in a filesystem or in a database. Often this decision is made for application design simplicity. Sometimes, performance measurements are also used. This paper looks at the question of fragmentation – one of the operational issues that can affect the performance and/or manageability of the system as deployed long term. As expected from the common wisdom, objects smaller than 256K are best stored in a database while objects larger than 1M are best stored in the filesystem. Between 256K and 1M, the read:write ratio and rate of object overwrite or replacement are important factors. We used the notion of “storage age” or number of object overwrites as way of normalizing wall clock time. Storage age allows our results or similar such results to be applied across a number of read:write ratios and object replacement rates.

Keywords: Database, File, Performance, Application

Configuring QoS Policy Propagation via Border Gateway Protocol

This chapter describes the tasks for configuring Policy Propagation via Border Gateway Protocol (BGP) on a router.

For complete conceptual information about this feature, see the section "QoS Policy Propagation via Border Gateway Protocol" in the chapter " Classification Overview" in this book.

Thursday, January 24, 2008

Configuring DN-Based Crypto Maps for VPN Device Access Control (Cisco Systems)

This document describes how to configure Distinguished Name (DN)-based crypto maps to provide access control so that a VPN device can establish VPN tunnels with a Cisco IOS® router. In this document's example, Rivest, Shamir, and Adelman (RSA) signature is the method for the IKE authentication. In addition to standard certificate validation, DN-based crypto maps try to match the peer's ISAKMP identity with certain fields in its certificates, such as the X.500 distinguished name or the fully qualified domain name (FQDN).

Configuring DN-Based Crypto Maps for VPN Device Access Control (pdf)

Configuring Dynamic Multipoint VPN Using GRE Over IPsec With OSPF, NAT, and Cisco IOS Firewall (Cisco Systems)

This document provides a sample configuration for Dynamic Multipoint VPN (DMVPN) using generic routing encapsulation (GRE) over IPsec with Open Shortest Path First (OSPF), Network Address Translation (NAT), and Cisco IOS® Firewall.

Configuring Dynamic Multipoint VPN Using GRE Over IPsec With OSPF, NAT, and Cisco IOS Firewall (pdf)

Monday, January 21, 2008

Cisco 7200 Series Routers Configuration Examples

Cisco 7200 Series Routers Configuration Examples and TechNotes (Cisco Systems)

Some links below may open a new browser window () to display the document you selected.

- Basic Router Configuration using SDM 03/Nov/2006

- Cisco IOS Certificate Enrollment Using Enhanced Enrollment Commands Configuration Example 17/Jan/2007

- Cisco Secure Desktop (CSD) on IOS Configuration Example using SDM 25/Oct/2006

- Clientless SSL VPN (WebVPN) on Cisco IOS with SDM Configuration Example 07/Dec/2006

- Configure and Enroll a Cisco IOS Router to Another Cisco IOS Router Configured as a CA Server 14/Jan/2008

- Configure and Enroll a Cisco VPN 3000 Concentrator to a Cisco IOS Router as a CA Server 14/Jan/2008

- Configuring a PC as a PPPoA Client Using L3 SSG/SSD 01/Jun/2005

- Configuring a Router IPsec Tunnel Private-to-Private Network with NAT and a Static 05/Jun/2006

- Configuring a Router-to-Router LAN-to-LAN Tunnel with a Router Initiating IKE Aggressive Mode 02/Feb/2006

- Configuring an IPsec Router Dynamic LAN-to-LAN Peer and VPN Clients 10/May/2007

- Configuring DN-Based Crypto Maps for VPN Device Access Control 19/Jan/2006

- Configuring Dynamic Multipoint VPN Using GRE Over IPsec With OSPF, NAT, and Cisco IOS Firewall 30/Nov/2006

- Configuring EtherChannel and 802.1Q Trunking Between Catalyst L2 Fixed Configuration Switches and a Router (InterVLAN Routing) 30/Aug/2005

- Configuring IOS-to-IOS IPSec Using AES Encryption 02/Feb/2006

- Configuring IPSec - Router to PIX 04/Nov/2002

- Configuring Router to VPN Client, Mode-Config, Wild-Card Pre-Shared Key with NAT 21/Nov/2006

- DMVPN and Easy VPN Server with ISAKMP Profiles Configuration Example 06/Dec/2006

- EIGRP Message Authentication Configuration Example 01/Mar/2007

- EzVPN Client and Server on the Same Router Configuration Example 08/Mar/2007

- Initial IPX Configuration and Troubleshooting Between Cisco Router and Novell Netware Server 01/Sep/2005

- Install Trusted Authority Certificates on Cisco IOS Routers for Trend URL Filtering Support 20/Apr/2007

- LAN-to-LAN IPsec Tunnel Between a Cisco VPN 3000 Concentrator and Router with AES Configuration Example 12/Mar/2007

- LAN-to-LAN IPsec Tunnel Between Two Routers Configuration Example 01/Oct/2006

- PIX 6.x: Dynamic IPsec Between a Statically Addressed IOS Router and the Dynamically Addressed PIX Firewall with NAT Configuration Example 08/Mar/2007

- PIX/ASA 7.x and IOS: VPN Fragmentation 12/Mar/2007

- PIX/ASA 7.x Security Appliance to an IOS Router LAN-to-LAN IPsec Tunnel Configuration Example 08/Mar/2007

- Router and VPN Client for Public Internet on a Stick Configuration Example 24/Apr/2007

- Site-to-Site Tunnel Between IOS Routers Using SEAL Sample Configuration 14/Jan/2008

- SSL VPN Client (SVC) on IOS with SDM Configuration Example 10/Jul/2007

- Telnet Password on Cisco Router to Avoid Error Message Configuration Example 03/Aug/2006

- Telnet, Console and AUX Port Passwords on Cisco Routers Configuration Example 02/Aug/2006

- Thin-Client SSL VPN (WebVPN) IOS Configuration Example with SDM 05/Sep/2006

- Using IOS Translation Rules - Creating Scalable Dial Plans for VoIP Networks 02/Feb/2006

- 7200 Easy VPN Server to 871 Easy VPN Remote Configuration Example 12/Mar/2007

IOS NAT Load-balancing for Two ISP Connections

This document describes a configuration for a Cisco IOS® router to connect a network to the Internet with Network Address Translation through two ISP connections. The Cisco IOS Software Network Address Translation (NAT) can distribute subsequent TCP connections and UDP sessions over multiple network connections if equal-cost routes to a given destination are available. In the event that one of the connections becomes unusable, object-tracking, a component of Optimized Edge Routing (OER), can be used to deactivate the route until the connection becomes available again, which assures network availability in spite of instability or unreliability of an Internet connection.

Saturday, January 19, 2008

Generating charts from accessible data tables and vice versa using the Google Charts API

This is why I wrote a small script that converts data tables to charts using the API and a wee bit of JavaScript.

Check out the demo page and download the script with the demo page and CSS to have a go with it yourself.

SparkLines in ASP.NET

SparkLine is a small, high resolution line chart. This article explains how to create and use SparkLines in your ASP.NET application.

Download SparkLine.zip - 4.1 KB

Gallery Server Pro - An ASP.NET Gallery for Sharing Photos, Video, Audio and Other Media

Gallery Server Pro is a complete, stable ASP.NET gallery for sharing photos, video, audio and other media. This article presents the overall architecture and major features.

Download source files - 3,986.5 KB

Online demo of Gallery Server Pro

System.Data.SQLite

System.Data.SQLite is an enhanced version of the original SQLite database engine. It is a complete drop-in replacement for the original sqlite3.dll (you can even rename it to sqlite3.dll). It has no linker dependency on the .NET runtime so it can be distributed independently of .NET, yet embedded in the binary is a complete ADO.NET 2.0 provider for full managed development.

RSS Newsfeed in TSQL using SQL Server's XML

Build an RSS newsfeed in TSQL, using the power of SQL Server's XML.

Read more...

Script Downloads:

Technology Newsfeeds

Simple Talk Newsfeeds

SQL Server Newsfeeds

Reading and Writing Files in SQL Server using T-SQL

SQL Server provides several "standard" techniques by which to read and write to files but, just occasionally, they aren't quite up to the task at hand – especially when dealing with large strings or relatively unstructured data. Phil Factor provides some T-SQL... Read more...

Creating cross tab queries and pivot tables in SQL

For those times when you absolutely, positively got to perform a cross tab query in SQL, Keith Fletcher's T-SQL stored procedure will allow you to do it "on the fly". You can add it to your database and start cross tabbing immediately, without any further setup or... Read more...

CODE DOWNLOAD

SQL Server development techniques and sql scripts ( Nigel Rivett)

Articles

sql server 2005 CTEs - sql server 2005 common-table expressions (CTEs)

sql server 2005 ssis exec - sql server 2005 executing ssis packages

sql server csv files - Creating csv files using bcp and stored procedures/

sql server 2005 SSIS FTP file rename - SSIS move/rename a file on an FTP site

TSQL

sp_executeSQL setting variables from dynamic sql

Retrieve Tree Hierarchy Retrieve formatted tree structure

Get table row counts Retrieve the number of rows in each table in a database

Find gaps in sequence numbers Find gaps in sequence numbers

Create text file Creating a text file from a stored procedure

spFormatOutputBuffer Retrieve a sql server error message

Access Temp Tables Across SPs Create a temp table in one SP and access from another

sp_CreateDataLoadScript Create a data insert script from a table

Create Script File Concatenate files to make single script

Move data using column definitions Move data from import table to production table using file format definition

Remove non-numeric characters Remove non-Numeric or non-alphameric characters from a string or field

Find non-alphameric characters Function to return all non-alphameric characters from a string in a table

Primary Key Columns Get all fields that are part of the primary key

Check if file exists find file - scripting object, xp_cmdshell, xp_fileexist

Cursors Advanced use of cursors in t-sql

Import Text Files Import and archive text files that arrive in a directory

f_GetEntryDelimiitted Get entries from csv string

fn_ParseCSVString Function to return a table from a delimitted (csv) string

bcp bcp using format file & quote delimitted strings

CSV String From Table Create delimitted string from table entries

BCP all tables BCP in and out data from all tables in a database

CrossTabs Crosstabs and pivot tables

s_ProcessAllFilesInDir Process all files in a directory

UpdateText Inserting text data to a table in 8000 byte chunks

Replace Text Search and place strings in a text column in a table

Table name as variable Accessing a table from a name in a variable

send email Send emails asynchronously

Importing Text-based data into SQL Server using TSQL

Importing Text-based data: Workbench (simple-talk.com)

How to import text files into SQL Server, without resorting to DTS or SSIS scripting. They go on to show how much can be done in TSQL.

Fast Import with the Quirky Update technique

CSV Importing- Comma-delimited and Comedy-Limited.

Unrotating a CSV Pivot-table on import

Download:

TheCode

Find Missing Date Ranges in SQL

Often, the quickest way to determine whether you have missing data in a table such a ledger or journal is to see if there are gaps in the dates where one wouldn't expect them. But how do you do that in an emergency, particularly in a large table, when every minute counts?

Download:

TSQL Source Code

Using Regular expressions with SQL Server via TSQL

TSQL Regular Expression Workbench (simple-talk.com)

Download TSQL Scripts:

RegexWorkbench.sql

RegexWorkbench2000.sql

A great deal can be done using commandline applications that work with regular expressions such as GREP and AWK. However, there are times where it is handy to use Regex directly from TSQL. There are two Regex engines available to SQL Server. These are

the .NET Regex which is in the system.text.regularexpression module

The ECMA Regex from VBScript.RegExp which is distributed with the IE browser and is used by Javascript and JScript.

Both of these are excellent standard implementations. Both work well in TSQL.

The .NET Regex requires the creation of CLR functions to provide regular expressions, and works only with SQL Server 2005, (and 2007) See CLR Integration by Christoffer Hedgate

The ECMA Regex can be used via VBScript.RegExp, which are available to SQL Server 2000 as well. The regex is compatible with Javascript.

The advantage of using CLR is that the regular expressions of the NET framework are very good, and performance is excellent. However, the techniques are well-known, whereas some of the more powerful uses of VBScript.RegExp have hardly ever been published, so this workbench will concentrate on the latter.

See Also:

SQL Home > T-SQL Programming

Managing Hierarchical Data in MySQL

Most users at one time or another have dealt with hierarchical data in a SQL database and no doubt learned that the management of hierarchical data is not what a relational database is intended for. The tables of a relational database are not hierarchical (like XML), but are simply a flat list. Hierarchical data has a parent-child relationship that is not naturally represented in a relational database table.

Accessing Distributed Data with the Federated Storage Engine (MySQL)

It's no secret that corporations are swimming in more data than ever before. IDC has recently pegged data growth at 48% a year, which means that corporate data nearly doubles every two years at many companies. In addition, purchased applications and custom built systems continue to bring in new databases that require attention, and business intelligence remains a red-hot area for modern enterprises with many companies building analytic data warehouses or data marts that continually feed information to key decision makers.

Improving Database Performance with Partitioning

A few years ago, I wrote an article entitled "The Foundation of Excellent Performance" (still available at http://www.tdan.com/i016fe03.htm) where I argued against the notion that SQL code was the number one contributor to performance in a database-driven system. Instead, I stated in the article that I firmly believed how good physical database design was far and away the leading component of superior database performance. In addition, I showed that Oracle's own research illustrated how poor design was the main culprit behind database downtime (planned or unplanned). In the years since then, I've not changed my stance and still think that any DBA who wants a high-performance database has got to invest in intelligent and savvy physical design to produce the kind of response times that make end users smile instead of scream.

Getting started with MySQL Proxy

The launch of MySQL Proxy has caused quite a commotion in the community. And with reason. For feature hungry people, this is undeniably the most exciting addition to MySQL set of tools.If the last statement has left you baffled, because you don't see the added value, don't worry. This article aims at giving you the feeling of what the Proxy can do.

MySQL Failover Strategy using State Management

Having a strategy for failover has become almost standard in the business world. Whether it is a total site failover from a NOC in one city to another, or just a simple redundant server. Over the years many technology solutions have been created for or adopted to MySQL to provide this type of strategy.

MySQL Failover Strategy using State Management, introducing MPP - Part 2

Now it's time to apply that concept to a load-balancer. In this Part 2 we will look at a strategy for using Linux Virtual Server with MPP for failover, and also take a closer look at the mechanics of MPP itself.

MySQL Failover Strategy using State Management, introducing MPP - Part 3

In this part 3 we will discuss how to configure and operate MPP, and additionally use MPP with MySQL Proxy to create a failover strategy.

Load and Search MySQL Data Using VB.NET 2005 in Windows Applications

MySQL data load and search are very important business requirements in any Windows or Internet web application development. In general, any application needs to show a result set of data and/or a single record to the end-users. In Windows applications it is very popular to show a result set of data by using the DataGridView, ListView or TreeView controls. A single record can be shown by the simple combination of the following controls: TextBox, ComboBox, ListBox, CheckBox, RadioButton, etc. MySQL data search is provided by using the required ADO.NET data objects and by refreshing the controls if necessary. These two processes, data load and search, should be fast and should be done with the proper code which depends on the controls in the Windows Form or Web Page. In this article I will show you how load and sort MySQL data using the DataGridView control. To search MySQL data the LIKE SQL operator will be used. Both programming implementations are done by using stored procedures for MySQL 5.0 database engine.

Using XML in MySQL 5.1 and 6.0

In this article, we discuss the XML functionality available in MySQL, with an emphasis on new features coming online in MySQL 5.1 and MySQL 6.0. We assume that you already have a working knowledge of XML, and that you know what the terms “valid” and “well-formed” mean. We also assume that you have some knowledge of XPath.

MySQL 5.1 partitions in practice

This article explains how to test the performance of a large database with MySQL 5.1, showing the advantages of using partitions. The test database uses data published by the US Bureau of Transportation Statistics. Currently, the data consists of ~ 113 million records (7.5 GB data + 5.2 GB index).

see also:

MySQL DevZone - Articles - Forge

Monday, January 14, 2008

Google Bookmarks FAQ

Bookmarklet

But to add bookmarks there's a very simple bookmarklet that should work on almost any browser. Just drag this to the links toolbar: Google Bookmark You'll also find it at the bottom of Google Bookmarks homepage.

Export my bookmarks from Google

Easy, just go to this page and you'll download a file called bookmarks.html, that can be imported in your browser. You can also export the bookmarks as a feed, but it won't be very useful unless you're a developer.

Bookmark favorite posts from Google Reader

Install Greasemonkey in Firefox, restart the browser and add this script. You'll see a new "Add bookmark" option at the bottom of each post.

Greasemonkey: Google Reader + Bookmarks

Adds reader posts to Google Bookmarks when clicked.

http://userscripts.org/scripts/show/6497

http://userscripts.org/scripts/review/6497 (Source for "Google Reader + Bookmarks")

http://userscripts.org/scripts/review/6497?format=txt

See also:

http://bookmarks.yahoo.com/

Sunday, January 13, 2008

simple pivot table in TSQL (SQL Server 2000)

select

t.myid as furnisher,

t1.myprice as price1_year,

t2.myprice price2_year from

(select distinct myid from mytable) t

join (select myid,myprice,rownum = (select count(*) from mytable t2 where t2.myid = t1.myid and t2.id <= t1.id) from mytable t1) t1 on

t.myid = t1.myid and t1.rownum = 1

join (select myid, myprice, rownum = (select count(*) from mytable t2 where t2.myid = t1.myid and t2.id <= t1.id) from mytable t1) t2 on

t.myid = t2.myid and t2.rownum = 2

order by 1

simple trick to get position of a record in TSQL

Suppose you have some table id and you want to know the row number of that id. Since there were deletes you can not rely on this field even it is an identity field. So here it goes:

select sum(1) from employee where emp_id <= @my_id

get table rowcount's using sysindexes in TSQL

original post: Row Count Using sysindexes System Table

Another alternative way to count the number of rows in the database tables is to make use of sysindexes system table. indid = 1 in this table means clustered index. In case the table does not have a clustered index, we are still able to count its rows, using indid = 0. It goes like so:

SELECT O.[name]

,I.rowcnt

FROM sysobjects O

JOIN sysindexes I

ON O.[id] = I.[id]

WHERE O.xtype = 'U'

AND O.status > 0

AND I.indid = 1

SQL Date Ranges UDF with CTE in TSQL

CREATE FUNCTION DateRange(@start DATETIME, @end DATETIME)

RETURNS TABLE

AS

RETURN (

WITH DateRange(CurrentDate) AS(

SELECT @start as CurrentDate

union all

SELECT DATEADD(day,1,CurrentDate)

FROM DateRange

WHERE CurrentDate < @end)

SELECT CurrentDate FROM DateRange );

' ------------------------------------------

usage:

SELECT CurrentDate FROM DateRange('20010101','20120901') OPTION(MAXRECURSION 10000)

Paging with ROW_NUMBER() in TSQL

In MSSQL 2000 we used to do paging either by dynamic sql or by some advanced

techniques like the example with rowcount.

In MSSQL 2005 with the introduction of ROW_NUMBER function life is a lot easier.

DECLARE @PageNum AS INT;

DECLARE @PageSize AS INT;

SET @PageNum = 2;

SET @PageSize = 10;

WITH OrdersRN AS

(

SELECT ROW_NUMBER() OVER(ORDER BY OrderDate, OrderID) AS RowNum

,OrderID

,OrderDate

,CustomerID

,EmployeeID

FROM dbo.Orders

)

SELECT *

FROM OrdersRN

WHERE RowNum BETWEEN (@PageNum - 1) * @PageSize + 1

AND @PageNum * @PageSize

ORDER BY OrderDate

,OrderID;

How to Insert DBCC Output into MSSQL Table

Sometimes it is useful to save DBCC maintenance routines into MSSQL server

table, so the info will be logged. Some DBCC commands support this directly via

the ...

INSERT INTO #table EXEC('DBCC ...')... type format (like for example DBCC USEROPTIONS), others don't

(like DBCC SHOWCONTIG). Fortunately there is some trick that will help

:)

DBCC can be used with WITH TABLERESULTS hint that outputs the output

in the table rowset format. So - here is a small code sample that demonstrates

the technique:

CREATE TABLE #x

(

f1 VARCHAR(50)

,f2 VARCHAR(50)

,indexname VARCHAR(50)

,indexid VARCHAR(50)

,f5 VARCHAR(50)

,f6 VARCHAR(50)

,f7 VARCHAR(50)

,f8 VARCHAR(50)

,f9 VARCHAR(50)

,f10 VARCHAR(50)

,f11 VARCHAR(50)

,f12 VARCHAR(50)

,f13 VARCHAR(50)

,f14 VARCHAR(50)

,f15 VARCHAR(50)

,f16 VARCHAR(50)

,bestcount VARCHAR(50)

,actualcount VARCHAR(50)

,logicalfragmentation VARCHAR(50)

,f20 varchar(50))

INSERT #x

EXEC('DBCC SHOWCONTIG(MyTable) WITH ALL_INDEXES, TABLERESULTS')

SELECT *

FROM #x

DROP TABLE #x

Using TOP with Subquery in TSQL

SQL Server 2005 introduced new TOP behavior - like TOP function supports variables and expressions.

As a result it is possible to use a subquery as input to TOP. Subquery should return scalar. Like so:

SELECT TOP(SELECT COUNT(*)

FROM Table2) Col1, Col2

FROM Table1

ORDER BY Col1

Which seems kinda strange for people with MSSQL 2000 experience. In addition

it is possible to use TOP with INSERT, UPDATE and DELETE like:

DELETE TOP(1000)

FROM Table1

WHERE Id < 10000

del.icio.us Button for Google Toolbar

For people who use both del.icio.us to save internet favorites and Google Toolbar within your browser:

I've created "Add to del.icio.us" button for your Google Toolbar using "Custom Buttons feature" of T4. Now it is possible to save your favorites with a single click. Installation also takes exactly one click:

Install del.icio.us button for Google Toolbar.

Predeployment I/O Best Practices (SQL Server Best Practices)

Summary: The I/O system is important to the performance of SQL Server. When configuring a new server for SQL Server or when adding or modifying the disk configuration of an existing system, it is good practice to determine the capacity of the I/O subsystem prior to deploying SQL Server. This white paper discusses validating and determining the capacity of an I/O subsystem. A number of tools are available for performing this type of testing. This white paper focuses on the SQLIO.exe tool, but also compares all available tools. It also covers basic I/O configuration best practices for SQL Server 2005.

Resources

SQLIO Disk Subsystem Benchmark Tool (Microsoft Download Center)

How to use the SQLIOSim utility to simulate SQL Server activity on a disk subsystem (Knowledge Base article)

IOMeter (IOMeter site)

SQL ISV blog (Microsoft SQL ISV Program Management Team)

SQL Server CAT Blog (Microsoft SQL Server Development Customer Advisory Team)

SQL Server Best Practices (TechNet site)

SQL Server AlwaysOn Partner Program (Microsoft SQL Server site)

SQL Server Urban Legends (Product Services and Support (PSS) Engineers blog)

SQL Server 2000 I/O Basics white papers

SQL Server 2000 I/O Basics

SQL Server I/O Basics, Chapter 2

Disk Subsystem Performance Analysis for Windows (Windows Hardware Developer Center)

Storport in Windows Server 2003: Improving Manageability and Performance in Hardware RAID and Storage Area Networks (Windows Storage Server white paper)

dynamic crosstab queries in TSQL (SQLMag.com)

Dynamic Crosstab Queries (SQLMag.com)

PIVOT SQL Server 2005 Syntax...

read the whole article:

PIVOT SQL Server 2005 Syntax, Using pivot table in ms access, Sql Server Pivot Table, what is a pivot table, pivot table tutorial

How do I load text or csv file data into SQL Server with BULK INSERT in TSQL

BULK INSERT examples to import CSV / TSV files into SQL Server.

CSV file

BULK INSERT OrdersBulkTSV file, tab-separated values

FROM 'c:\file.csv'

WITH

(

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

BULK INSERT OrdersBulk---

FROM 'c:\file.csv'

WITH

(

FIELDTERMINATOR = '\t',

ROWTERMINATOR = '\n'

)

BULK INSERT OrdersBulkFIRSTROW parameter

FROM 'c:\file.csv'

WITH

(

FIELDTERMINATOR = '\t',

ROWTERMINATOR = '\t\n'

)

BULK INSERT OrdersBulk---

FROM 'c:\file.csv'

WITH

(

FIRSTROW = 2,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

BULK INSERT OrdersBulk---

FROM 'c:\file.csv'

WITH

(

FIRSTROW = 2,

MAXERRORS = 0,

FIELDTERMINATOR = ',',

ROWTERMINATOR = '\n'

)

How do I get a list of SQL Server tables and their row counts?

1.)

EXEC sp_spaceused 'tablename'

2.) To get an *approximate* count for all tables, you can use the following:

SELECT [TableName] = so.name, [RowCount] = MAX(si.rows)

FROM sysobjects so, sysindexes si

WHERE so.xtype = 'U' AND si.id = OBJECT_ID(so.name)

GROUP BY so.name

ORDER BY 2 DESC

3.) Undocumented methods:

sp_MSForEachTable

CREATE PROCEDURE dbo.listTableRowCounts AS

BEGIN

SET NOCOUNT ON

DECLARE @SQL VARCHAR(255)

SET @SQL = 'DBCC UPDATEUSAGE (' + DB_NAME() + ')'

EXEC(@SQL)

CREATE TABLE #foo (

tablename VARCHAR(255),

rc INT )

INSERT #foo

EXEC sp_msForEachTable 'SELECT PARSENAME(''?'', 1), COUNT(*) FROM ?'

SELECT tablename, rc FROM #foo ORDER BY rc DESC

DROP TABLE #foo

END

Replicating 'Taskpad / Table Info' view

CREATE PROCEDURE dbo.allTables_SpaceUsed

AS

BEGIN

SET NOCOUNT ON

DBCC UPDATEUSAGE(0)

CREATE TABLE #t

(

id INT,

TableName VARCHAR(32),

NRows INT,

Reserved FLOAT,

TableSize FLOAT,

IndexSize FLOAT,

FreeSpace FLOAT

)

INSERT #t EXEC sp_msForEachTable 'SELECT

OBJECT_ID(PARSENAME(''?'',1)),

PARSENAME(''?'',1),

COUNT(*),0,0,0,0 FROM ?'

DECLARE @low INT

SELECT @low = [low] FROM master.dbo.spt_values

WHERE number = 1

AND type = 'E'

UPDATE #t SET Reserved = x.r, IndexSize = x.i FROM

(SELECT id, r = SUM(si.reserved), i = SUM(si.used)

FROM sysindexes si

WHERE si.indid IN (0, 1, 255)

GROUP BY id) x

WHERE x.id = #t.id

UPDATE #t SET TableSize = (SELECT SUM(si.dpages)

FROM sysindexes si

WHERE si.indid < 2

AND si.id = #t.id)

UPDATE #t SET TableSize = TableSize +

(SELECT COALESCE(SUM(used), 0)

FROM sysindexes si

WHERE si.indid = 255

AND si.id = #t.id)

UPDATE #t SET FreeSpace = Reserved - IndexSize

UPDATE #t SET IndexSize = IndexSize - TableSize

SELECT

tablename,

nrows,

Reserved = LTRIM(STR(

reserved * @low / 1024.,15,0) +

' ' + 'KB'),

DataSize = LTRIM(STR(

tablesize * @low / 1024.,15,0) +

' ' + 'KB'),

IndexSize = LTRIM(STR(

indexSize * @low / 1024.,15,0) +

' ' + 'KB'),

FreeSpace = LTRIM(STR(

freeSpace * @low / 1024.,15,0) +

' ' + 'KB')

FROM #t

ORDER BY 1

DROP TABLE #t

END

SQL Server System Stored Procedures

SQL Server contains a wealth of features that you can use in your own stored procedures and applications. This article explores a couple dozen of my favorite system stored procedures, including extended stored procedures and several that are undocumented.

sp_monitor

Let's get started with an easy one. sp_monitor displays statistics about the instance of SQL Server in which it runs. It takes no parameters and returns all kinds of interesting information, including how busy SQL Server has kept the machine's CPU, the number of input and output packets the server has processed, the number of errors it has encountered, a count of reads and writes, and the number of successful and attempted logins.

When you run this stored procedure from the master database. It reports each statistic as a number in the form of either number(number)-number% or number(number). The first number is since SQL Server restarted and the second is since sp_monitor was last run, so you can accumulate and explore statistics over time, and some values also provide the percentage difference between the two.

sp_bindsession

Related to: sp_getbindtoken

Support for transactions is one of the most important reasons to use database engines such as SQL Server; hopefully you make frequent, wise use of them in your applications. But did you know that you can bind operations on different connections in the same instance of SQL Server to the same transaction? This is conceptually a little like using the Distributed Transaction Coordinator to bind transactions across heterogeneous database engines. Once you let your imagination run free, it is easy to find a lot of uses for sp_bindsession and its sibling sp_getbindtoken.

A complete code example to demonstrate the use of sp_bindsession is a bit too complex to include here, but you can find a great example here. The idea goes something like this: Start by grabbing a session token using sp_getbindtoken.

DECLARE @bindToken varchar(255) EXECUTE sp_getbindtoken @bindToken OUTPUT SELECT @bindToken AS Tokensp_create_removable

Related to: sp_certify_removable

Have you ever needed to put a SQL Server database on a read-only media, such as a CD? Then you need sp_create_removable, which creates a removable media database. The stored procedure creates three or more files for the system catalog tables, transaction log, and one or more for the data tables, and places the database on those files. You can't use CREATE DATABASE or Enterprise Manager to create the removable database. You'll need to run sp_create_removable with sysadmin privileges.

Below is an example of using this stored procedure to create a WxmanagerRem removable database, specifying the size of each of the component file types. sp_certify_removable verifies that the resulting database is configured correctly to be read-only.

sp_create_removable 'WxManagerRem', 'WxManagerRemSYS', 'E:\WxManagerRem.mdf', 4, 'WxManagerRemLog', 'E:\WxManagerRem.LDF', 2, 'WxManagerRemData', 'E:\WxManagerRem.ndf', 4 GO sp_certify_removable 'WxManagerRem', 'auto'sp_addmessage

Related to: sp_altermessage, sp_dropmessage

SQL Server contains a rich set of error messages that it uses to annoy users, administrators, and developers. Actually, SQL Server does a pretty decent job of letting you know exactly what went wrong with an errant T-SQL operation, and most development tools let you respond to problems and perhaps retry operations. There are hundreds of standard messages, many of which are formatted to allow parameter substitution for customized feedback, such as to specify the objects involved in the problem.

But sometimes it is handy to go beyond the standard messages provided by SQL Server. That's where sp_addmessage comes in. Once you create your own message with a unique error number, you can raise that error from your own stored procedures. Using sp_altermessage, you can even customize the contents of built-in messages. Be careful with this latter technique, since you have to be careful not to break something that SQL Server does with the messages!

Calling sp_addmessage is easy.

USE master EXEC sp_addmessage @msgnum = 60000, @severity = 16, @msgtext = N'The item named %s already exists in %s.', @lang = 'us_english'You can drop all instances of a message number, no matter how many language variations you have, with the sp_dropmessage stored procedure and the all option.

EXEC sp_dropmessage 60000, 'all'A useful technique when you need utmost flexibility in a T-SQL application is to build the code dynamically and execute it on the fly. Microsoft has made such dynamic execution far more efficient than in earlier versions of SQL Server, such as by caching the execution plan for multiple executions. The danger, of course, is that building a SQL statement on the fly and concatenating parameter values is that it opens up the app to SQL injection attacks, so it should be used with caution and careful validation of any user-controllable input. Dynamic SQL also has different security issues. SQL Server will always validate the user's permissions on the underlying objects, even though there is an unbroken ownership chain from the stored procedure through objects referenced.

You can execute SQL dynamically using either the EXECUTE T-SQL statement or the sp_executesql stored procedure. sp_executesql is the more flexible of the two though, because it supports using parameters. The unlimited, comma-delimited list of parameters can include the data type so that SQL Server will do data type validation.

Listing 1 shows an example of sp_executesql that reads data from the Customers table in the Northwind database. This example uses a string of parameter names and data types in the @ParmDefinition string, including both a single input and a single output value. The single input parameter is in the @CustomerID variable. Notice too how the crux of the code, where the dynamic SQL is actually executed, uses the EXECUTE statement to actually run the code. This is an interesting example of combining the two statements that shows how each is used.

One thing you have to be careful of with dynamic SQL is the context of the statements. Consider this code.

USE pubs GO sp_executesql N'USE Northwind' GO SELECT * FROM Shippers GOsp_server_info

Sometimes it is handy to find out things about the environment you're running in. sp_server_info returns a list of attributes about the SQL Server instance, the database gateway in use, or the underlying data source. The attributes returned include such mundane things as OWNER_TERM for what the database calls an owner (hopefully it is not a shock that it is "owner" for SQL Server), maximum length of table and other object names, whether certain operations are permitted, and the size of things such as the maximum number of columns in an index.

You can call sp_server_info in either of two ways. Without parameters, it returns a recordset with the complete list of attributes, including the attribute ID (an int), its name, and the current value. Or you can specify the attribute ID to return a single record with the attribute's information.

sp_server_info sp_server_info @attribute_id = 2This is one of those rather interesting anomalies in SQL Server. According to BOL, sp_procoption "sets procedure options." The interesting part is that "s" at the end of "options." Despite the description, sp_procoption sets exactly one option to configure a stored procedure for auto execution when SQL Server starts up. Only objects in the master database owned by dbo can have their startup setting changed, and that change is restricted to stored procedures with no parameters.

It is easy enough to use.

USE master -- only in master GO EXEC sp_procoption 'sp_IndexRebuild', 'startup', 'true'According to the latest beta documentation I have for SQL Server 2005, there is still only going to be a single option for this procedure in the next version. One can only guess at why there is such a procedure seemingly designed for multiple options! It is particularly strange since this procedure replaced two other system stored procedures, sp_makestartup and sp_unmakestartup, in older versions of SQL Server. If anyone knows the story about this one trick pony, I'd love to hear it.

Do you ever stop in the middle of an intense T-SQL coding session and try to remember some arcane feature of one of the T-SQL data types? Maybe you can't remember the exact number of bytes that an image type can hold, or whether a varbinary is 8,000 or 8,192 bytes. You can fire up BOL, or you can run sp_datatype_info.

This system stored procedure returns just about everything you need to know about the data types available in any SQL Server database. The list includes types that are used as identity fields, a couple of arcane types, and any user-defined data types in the current database. I just ran it on my development machine in master and ended up with 51, including some user defined types that are still in there from when I added some objects and types to a database after forgetting to change the current database. (Sigh).

Anyway, the information returned includes the internal type name and id, the precision of numeric types (essentially the number of digits), the characters used for literal values (such as ' for strings and $ for money), whether it is nullable, and plenty more.

Calling the procedure is straightforward. For a complete list of types, pass either a null or no parameter. For a single type, pass in the id value.

sp_datatype_info sp_datatype_info -11Related to: sp_MSdependencies*

One of the things that drives me nuts sometimes about relational databases is the incredible weave of dependencies in any non-trivial database. The problem commonly rears its ugly head when I try to delete an object only to find out that it has all kinds of stuff that depends on that object, such as how foreign keys and stored procedures depend on the existence of a particular table. Usually I look at the error message, go delete what it tells me is the problem dependency, try to delete the object again, go delete the dependencies, and start over. Not a great way to make use of my time.

|

ISNULL vs. COALESCE speed test in TSQL

IsNull (col1, 'somevalue') vs Coalesce(col1, 'somevalue')

so i did a little testing. first i inserted 500.000 rows into a table with 5 columns:

create table TestTable (col1 varchar(50), col2 varchar(50), col3

varchar(50), col4 varchar(50), col5 varchar(50))

in which for each 100k rows one column was filled with 50 chars and the rest

were null.

then i ran both of the statements (one for coalesce and one for isnull) 10

times and took 10 elapsed time measures. Out of those 10 elapsed times i didn't

use the 2 that were most deviated from the rest:. Then i put the 2 select

statements into a stored procedure and did the same thing. I didn't take into

account the first run of each sproc because of the execution plan calculation.

What surprised me is that the stored procedure versions were slower by 4 seconds

in average. weird... if any one can explain that i'd be glad to know.

I would really like to know how IsNull works internally, because COALESCE

works like this:

COALESCE(expression1,...n) is

equivalent to this CASE function:

CASE

WHEN

(expression1 IS NOT NULL) THEN expression1

...

WHEN (expressionN IS NOT NULL) THEN expressionN

ELSE

NULL

that is directly from BOL. So my guess is that IsNull has something slower

inside which is surprising acctually.

I ran all this on a box with Microsoft SQL Server 2000 - 8.00.760

(Intel X86), Windows Server 2003, 512 Mb of RAM and 864 MHz CPU

RESULTS:

| coalesce | isnull | |

| No Sproc | 23258 ms | 27613 ms |

| Sproc | 27255 ms | 31962 ms |

The whole script:

use northwind

if

object_id('tempdb..#temp') is not null

drop table #temp

declare @var varchar(50)

set @var

= 'fberjhreaugheagh954wz645whla2309563498743wzherusbr'

select t1.col1,

t1.col2, t1.col3, t1.col4, t1.col5

into #temp

from

(

select @var

col1, null col2 , null col3, null col4, null col5 union all

select null col1,

@var col2 , null col3, null col4, null col5 union all

select null col1, null

col2 , @var col3, null col4, null col5 union all

select null col1, null col2

, null col3, @var col4, null col5 union all

select null col1, null col2 ,

null col3, null col4, @var col5

) t1

cross join

(

select @var col1,

null col2 , null col3, null col4, null col5 union all

select null col1, @var

col2 , null col3, null col4, null col5 union all

select null col1, null col2

, @var col3, null col4, null col5 union all

select null col1, null col2 ,

null col3, @var col4, null col5 union all

select null col1, null col2 , null

col3, null col4, @var col5

) t2

cross join

(

select @var col1, null

col2 , null col3, null col4, null col5 union all

select null col1, @var col2

, null col3, null col4, null col5 union all

select null col1, null col2 ,

@var col3, null col4, null col5 union all

select null col1, null col2 , null

col3, @var col4, null col5 union all

select null col1, null col2 , null col3,

null col4, @var col5

) t3

cross join

(

select @var col1, null col2 ,

null col3, null col4, null col5 union all

select null col1, @var col2 , null

col3, null col4, null col5 union all

select null col1, null col2 , @var col3,

null col4, null col5 union all

select null col1, null col2 , null col3, @var

col4, null col5 union all

select null col1, null col2 , null col3, null col4,

@var col5

) t4

cross join

(

select @var col1, null col2 , null col3,

null col4, null col5 union all

select null col1, @var col2 , null col3, null

col4, null col5 union all

select null col1, null col2 , @var col3, null col4,

null col5 union all

select null col1, null col2 , null col3, @var col4, null

col5 union all

select null col1, null col2 , null col3, null col4, @var

col5

) t5

if

object_id('TestTable') is not null

drop table TestTable

create table

TestTable (col1 varchar(50), col2 varchar(50), col3 varchar(50), col4

varchar(50), col5 varchar(50))

go

set ROWCOUNT

100000

go

insert into TestTable

select t1.col1, t1.col2, t1.col3,

t1.col4, t1.col5

from #temp t1, #temp t2

where t1.col1 is not

null

go

insert into TestTable

select t1.col1, t1.col2, t1.col3,

t1.col4, t1.col5

from #temp t1, #temp t2

where t1.col2 is not

null

go

insert into TestTable

select t1.col1, t1.col2, t1.col3,

t1.col4, t1.col5

from #temp t1, #temp t2

where t1.col3 is not

null

go

insert into TestTable

select t1.col1, t1.col2, t1.col3,

t1.col4, t1.col5

from #temp t1, #temp t2

where t1.col4 is not

null

go

insert into TestTable

select t1.col1, t1.col2, t1.col3,

t1.col4, t1.col5

from #temp t1, #temp t2

where t1.col5 is not

null

go

set ROWCOUNT 0

go

create procedure

spTestTableCoalesce

as

declare @testValue varchar(50)

set @testValue =

'this is a 50 char sentence.i have no other idea :)'

select

coalesce(col1, @testValue) as col1,

coalesce(col2, @testValue)

as col2,

coalesce(col3, @testValue) as col3,

coalesce(col4,

@testValue) as col4,

coalesce(col5, @testValue) as col5

from

TestTable

go

create procedure

spTestTableIsNull

as

declare @testValue varchar(50)

set @testValue =

'this is a 50 char sentence.i have no other idea :)'

select

isnull(col1, @testValue) as col1,

isnull(col2, @testValue) as

col2,

isnull(col3, @testValue) as col3,

isnull(col4,

@testValue) as col4,

isnull(col5, @testValue) as col5

from

TestTable

go

-- this is so my disk space doesn't

go to zero...

DBCC SHRINKDATABASE ('Northwind', 10)

go

select count(*) as RecordCount from

TestTable

go

set statistics time

on

--ran 10 times

declare @testValue

varchar(50)

set @testValue = 'this is a 50 char sentence.i have no other idea

:)'

select coalesce(col1, @testValue) as col1,

coalesce(col2,

@testValue) as col2,

coalesce(col3, @testValue) as col3,

coalesce(col4, @testValue) as col4,

coalesce(col5,

@testValue) as col5

from TestTable

go

--ran 10 times

declare

@testValue varchar(50)

set @testValue = 'this is a 50 char sentence.i have no

other idea :)'

select isnull(col1, @testValue) as col1,

isnull(col2, @testValue) as col2,

isnull(col3, @testValue)

as col3,

isnull(col4, @testValue) as col4,

isnull(col5,

@testValue) as col5

from TestTable

--ran 10 times

exec

spTestTableCoalesce

go

--ran 10 times

exec

spTestTableIsNull

go

drop table #temp

drop table

TestTable

drop procedure spTestTableIsNull

drop procedure

spTestTableCoalesce